Introduction

With the Internet reaching all parts of the world, the amount of data generated every day is enormous. According to a report by ExplodingTopics, as of 2nd August 2023, an average of 328.77 million gigabytes of data is generated every day, which is equal to 66,000 million songs. These large quantities of data are referred to as Big Data. Ever since its advent, it has left businesses and industries with the challenging task of storing it and harnessing value from it. In this blog, I will be sharing some fundamental terminology related to big data.
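
As a quick sanity check on the figures above, the two numbers together imply an average song size of roughly 5 MB. This is only a back-of-the-envelope sketch, and it assumes decimal units (1 GB = 1,000 MB), which is my assumption rather than something stated in the report:

```python
# Figures quoted from the report cited above.
daily_data_gb = 328.77e6   # 328.77 million gigabytes per day
songs = 66_000e6           # 66,000 million songs

# Assumption: decimal units, 1 GB = 1,000 MB.
mb_per_song = daily_data_gb * 1000 / songs
print(f"Implied size per song: {mb_per_song:.2f} MB")
```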

What is Big Data and its impact on businesses

Big data can be defined as high-volume, high-velocity, and dynamic data generated by users and machines. These data are left behind as traces and can be analyzed to extract valuable insights and patterns, using tools like Apache Hadoop and data science techniques.

Big data is widely used by companies such as Amazon, Walmart, and Facebook, to name a few, in their recommendation and search engines. For example, Walmart introduced its own search engine, Polaris, to provide users with customized search results, understand customer preferences, and optimize assortment and inventory management. It witnessed a 10-15% increase in shoppers completing a purchase after searching for a product using the new search engine.

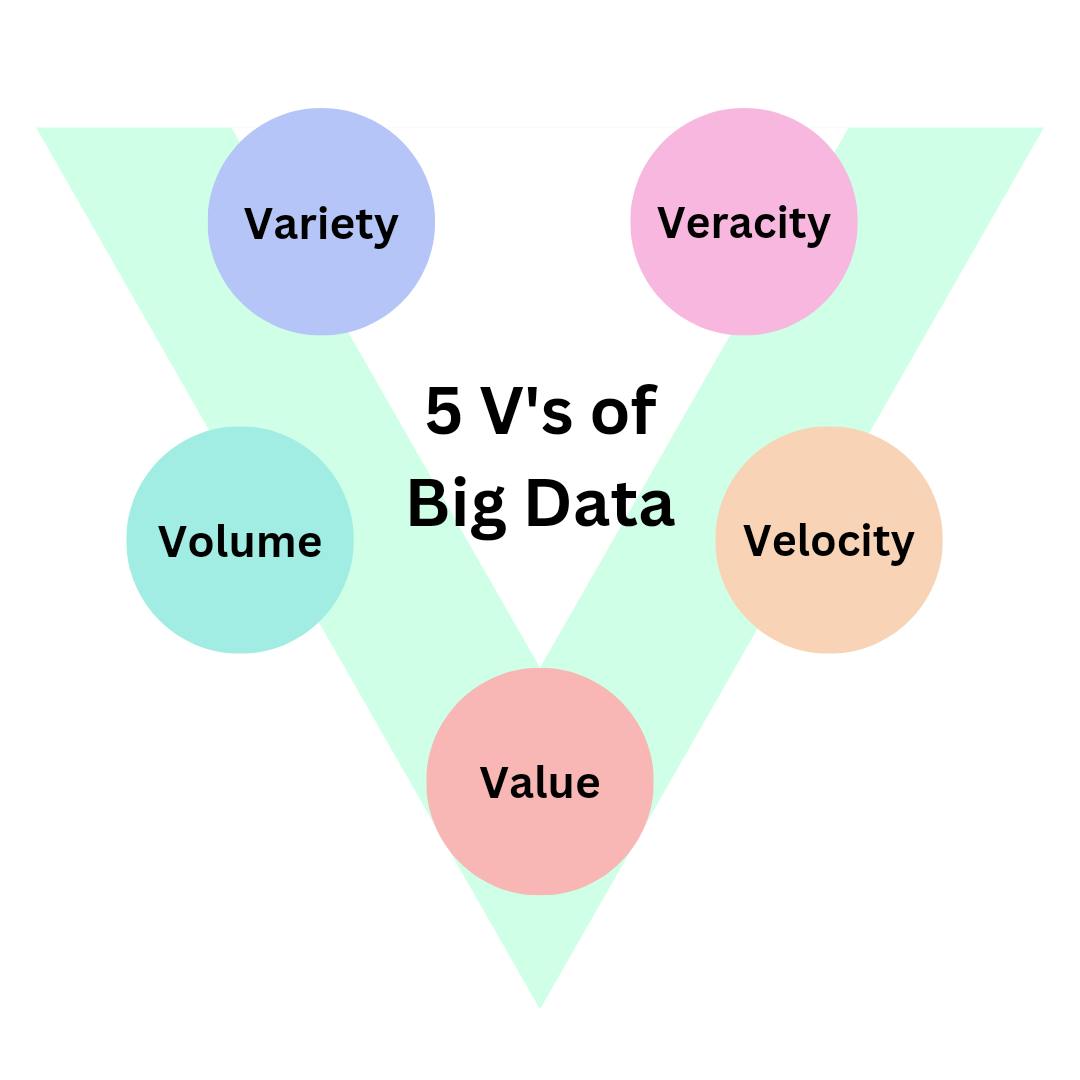

5 V’s of Big data

The 5 V’s of big data is a popular concept used to describe the characteristics of big data and how it differs from traditional data. It includes:

Volume: Refers to the size of data generated and collected. Big data is usually measured in petabytes and beyond.

Velocity: Refers to the speed at which data is generated, collected and processed. It is often generated in real time by online users and sensors.

Variety: Refers to types of data generated and its different formats. These can include text, images, JSON, XML etc.

Veracity: Refers to the accuracy and quality of data. It is one of the important V’s, as reliable information can only be extracted from reliable data.

Value: Refers to the insights and knowledge that businesses can obtain by analyzing the data.

Forms of Big Data:

Big data comes in 3 forms:

Structured Data: Highly organized and formatted data which follows a pre-defined schema. It is stored in the form of tables, spreadsheets and databases, and can be queried using SQL.

Unstructured Data: Data of different formats like text, images, video etc. which lacks organization or a pre-defined schema.

Semi-Structured Data: This type does not have a strict tabular structure but has some amount of organization. Includes formats like JSON, XML and key-value stores.
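
To make the three forms more concrete, here is a small sketch using only Python's standard library. The table, the JSON records and the review text are all made-up sample data for illustration:

```python
import json
import sqlite3

# Structured: rows that follow a pre-defined schema, queried with SQL.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "asha", 25.0), (2, "ravi", 40.5), (3, "asha", 10.0)],
)
total_for_asha = conn.execute(
    "SELECT SUM(amount) FROM orders WHERE customer = ?", ("asha",)
).fetchone()[0]

# Semi-structured: JSON has keys and nesting, but records need not share fields.
events = json.loads(
    '[{"user": "asha", "action": "search", "query": "shoes"},'
    ' {"user": "ravi", "action": "click"}]'
)
queries = [e["query"] for e in events if "query" in e]

# Unstructured: free text with no schema; any structure must be inferred.
review = "Delivery was quick, but the packaging was damaged."
word_count = len(review.split())

print(total_for_asha, queries, word_count)
```

Notice that the structured data can be summed directly with a query, the semi-structured records have to be checked for missing fields, and the unstructured text only gives us something as crude as a word count until more analysis is applied.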

How to make sense of Big Data?

To extract meaningful information and obtain maximum value from the big data that we analyze, the following 6-step framework can be used.

Step 1 - Determine the problem

Defining the objective of the project and the business problem to be addressed through the analysis.

Step 2 - Collect the data

Obtaining relevant data to carry out the analysis, while considering important factors like privacy.

Step 3 - Explore the data

Recognizing trends and patterns in the data and visualizing them using graphs and other visual media.

Step 4 - Analyze the data

This step involves building a model. The modelling for big data analysis is, however, different from that for typical data science work, because the choice of model depends on the complexity and the volume of the data. The model is fit to the data and then validated.
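
As a rough illustration of "fit, then validate", here is a tiny sketch in plain Python. It uses a straight-line model and randomly generated sample data, both of which are my own assumptions for the example; a real big data project would use far larger data and distributed tooling:

```python
import random

# Hypothetical data: y is roughly 3x + 2 plus a little noise.
random.seed(0)
data = [(x, 3 * x + 2 + random.uniform(-1, 1)) for x in range(100)]

# Hold out 20% of the shuffled data for validation.
random.shuffle(data)
split = int(0.8 * len(data))
train, valid = data[:split], data[split:]

def fit_line(points):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((x - mean_x) ** 2 for x, _ in points)
    slope = cov / var
    return slope, mean_y - slope * mean_x

def mean_abs_error(points, slope, intercept):
    """Average absolute gap between predictions and actual values."""
    return sum(abs(y - (slope * x + intercept)) for x, y in points) / len(points)

# Fit on the training portion only, then measure error on unseen data.
slope, intercept = fit_line(train)
valid_error = mean_abs_error(valid, slope, intercept)
print(f"slope={slope:.2f}, intercept={intercept:.2f}, validation MAE={valid_error:.2f}")
```

The key idea is that the error is measured on data the model never saw during fitting, which gives a fairer picture of how it will behave on new data.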

Step 5 - Storytelling and Communication

In this step, the results of the analysis are evaluated and presented. All valid conclusions from the analysis are carried forward to the next step.

Step 6 - Taking action

Decisions are made based on the analysis conducted.

Use Cases of Big Data:

Exploration: Mining and exploring data from different sources to improve decision-making.

Enhanced 360-degree customer view: Getting a complete idea of customer behaviour to drive better engagement and revenue.

Security and Intelligence extension: Processing and analyzing new forms of data, such as social media posts, to strengthen security and improve intelligence.

Operation analysis: Making sense of machine-generated data, including GPS, other signal data and real-time data streamed from sensors.

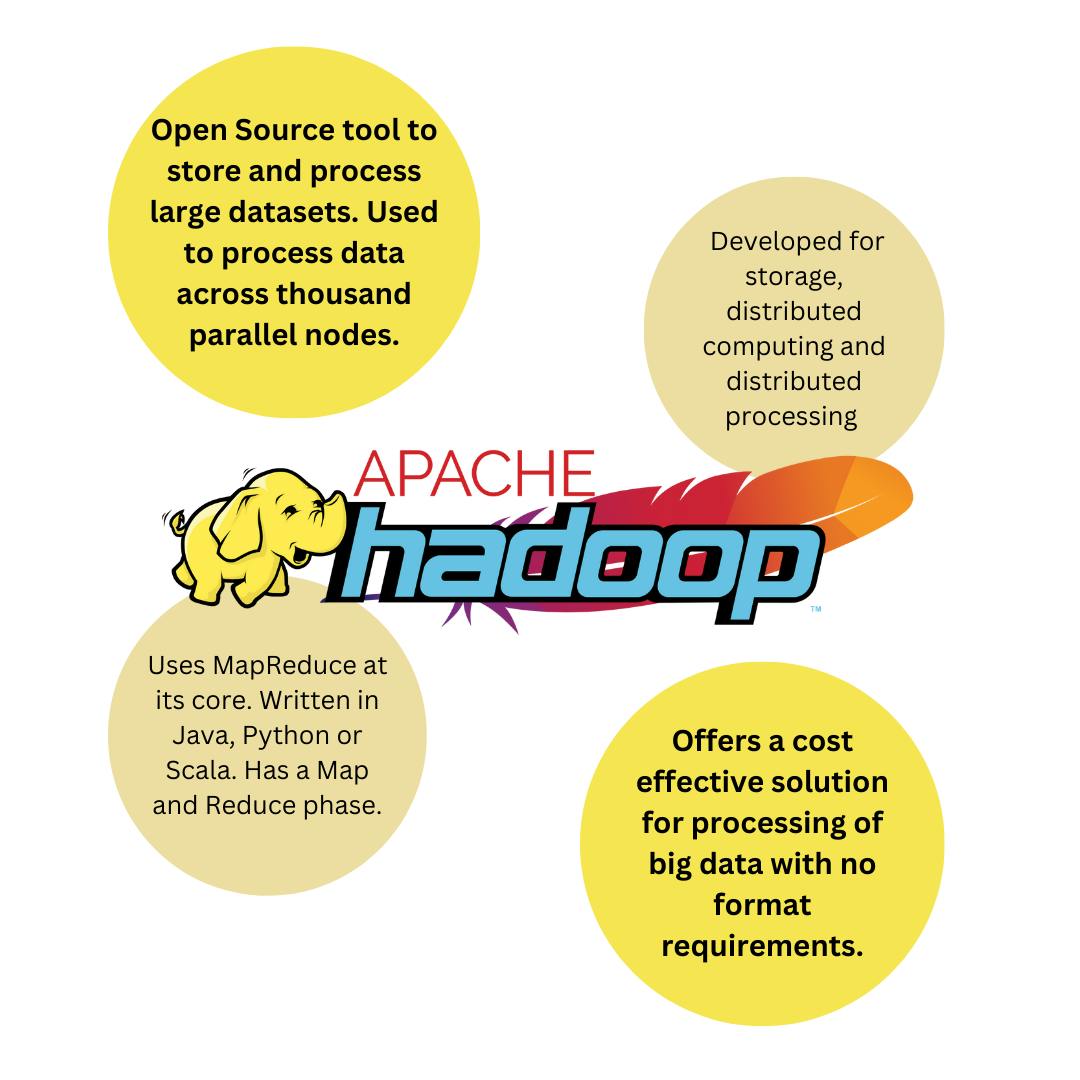

Hadoop

Apache Hadoop is an open-source framework to store and process big data. It provides a cost-effective storage solution for large volumes of data with no format requirements. Its data processing is built on the MapReduce programming model, and the framework itself is written in Java.
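
To give a feel for the MapReduce idea, here is a word count written as separate map, shuffle and reduce steps. Please note this is an in-memory Python sketch of the concept only, not Hadoop's actual API, and the function names are my own:

```python
from collections import defaultdict

documents = ["big data is big", "data is valuable"]

def map_phase(doc):
    """Map: emit a (word, 1) pair for every word in one input record."""
    return [(word, 1) for word in doc.split()]

def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)

mapped = [pair for doc in documents for pair in map_phase(doc)]
grouped = shuffle_phase(mapped)
word_counts = dict(reduce_phase(k, v) for k, v in grouped.items())
print(word_counts)
```

In a real cluster, the map and reduce steps run in parallel on many machines, each working on its own slice of the data, which is what makes the model suitable for big data.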

The Hadoop Distributed File System (HDFS) provides a way to store data across a cluster of computers in a distributed manner. It replicates data across multiple nodes of the cluster, ensuring high availability of data.
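
The sketch below shows why replication helps with availability. It is a toy model only: the round-robin placement, the node names and the tiny sizes are my assumptions for illustration, and real HDFS uses its own rack-aware placement policy:

```python
def place_blocks(file_size, block_size, nodes, replication):
    """Split a file into blocks and assign each block to `replication` distinct nodes.

    Toy round-robin placement; real HDFS uses a rack-aware policy.
    """
    if replication > len(nodes):
        raise ValueError("replication cannot exceed the number of nodes")
    num_blocks = -(-file_size // block_size)  # ceiling division
    placement = {}
    for block in range(num_blocks):
        placement[block] = [nodes[(block + r) % len(nodes)] for r in range(replication)]
    return placement

def readable_after_failure(placement, failed_node):
    """A file stays readable if every block has at least one surviving replica."""
    return all(any(n != failed_node for n in replicas) for replicas in placement.values())

nodes = ["node-a", "node-b", "node-c", "node-d"]
placement = place_blocks(file_size=500, block_size=128, nodes=nodes, replication=3)
print(placement)
print(readable_after_failure(placement, "node-b"))
```

Since every block lives on three different nodes, losing any single node still leaves at least two copies of each block, so the file remains readable.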

Outro

I hope I was able to give you a basic understanding of big data and its relevant terminologies. Feel free to give me your feedback and follow for more such blogs.

Images conceptualized and designed by Athithya.